Creating a 2M Parameter Thinking LLM from Scratch Using Python

The world of language models has often been associated with massive, resource-intensive systems like GPT-4 or PaLM, boasting billions of parameters and trained on enormous datasets. More recently, reasoning-focused models such as OpenAI's o3 and DeepSeek-R1 have drawn attention to step-by-step "thinking". Those models are themselves very large (DeepSeek-R1 has 671 billion parameters), but the same idea can be explored at a tiny scale: a model with around 2 million parameters can still show surprising competence on narrow, well-defined reasoning tasks.

In this blog post, we'll explore how you can build a 2M parameter "thinking" LLM entirely from scratch, using Python and open-source tools — without requiring a supercomputer.

Why Build a Small Language Model?

While massive LLMs capture headlines, small models come with their own powerful advantages:

- ✅ Fast Training – Small models can be trained in hours instead of days.

- ✅ Lower Costs – You don’t need expensive GPUs or cloud infrastructure.

- ✅ Deploy Anywhere – Perfect for edge devices, mobile apps, or offline use.

- ✅ Customizable – You can fine-tune for specific use cases without large datasets.

Most importantly, they offer an excellent learning opportunity. You’ll understand the inner workings of transformers, attention mechanisms, and training dynamics — all while building a usable AI system.

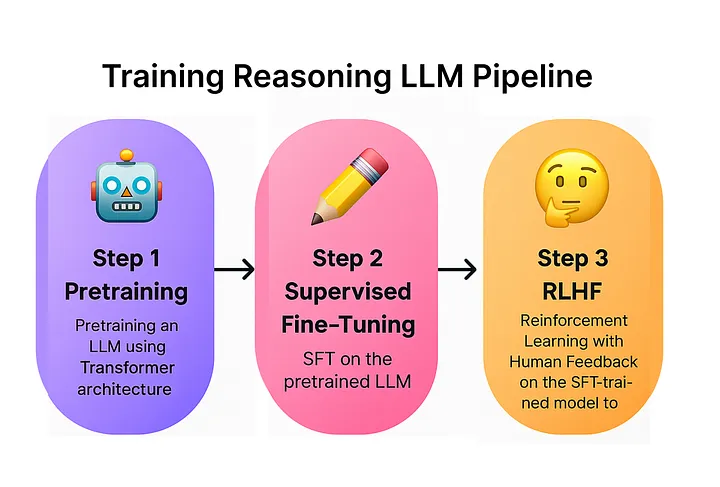

The Core Idea: Transformers at a Small Scale

Even with just 2 million parameters, a well-designed transformer can learn to generate and complete text, and to follow simple step-by-step reasoning patterns within a narrow domain. These compact models use the same building blocks as their larger cousins:

- Token and Position Embeddings: Turning text into a form the model can understand.

- Self-Attention Mechanisms: Allowing the model to "focus" on relevant words.

- Layer Norms and Feedforward Layers: Layer normalization keeps training stable, and the feedforward layers transform each token's representation after attention.

- Final Prediction Head: Producing the next-word predictions or outputs.

The key is to scale down wisely — using smaller embeddings, fewer attention heads, and shallower layers — while still retaining the core structure.
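To make the self-attention building block concrete, here is a minimal sketch of single-head causal attention in plain Python. It is an illustration under simplifying assumptions, not a training-ready implementation: the function names and toy vectors are hypothetical, and the learned query, key, and value projections that a real model would use are omitted.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def causal_self_attention(queries, keys, values):
    """Single-head scaled dot-product attention with a causal mask.

    queries, keys, values: lists of equal-length float vectors, one per token.
    Token i may only attend to tokens 0..i, as in a decoder-only model.
    """
    dim = len(keys[0])
    outputs, all_weights = [], []
    for i, q in enumerate(queries):
        # Score token i against itself and every earlier token.
        scores = [sum(a * b for a, b in zip(q, keys[j])) / math.sqrt(dim)
                  for j in range(i + 1)]
        weights = softmax(scores)
        # The output is the attention-weighted average of the visible values.
        out = [sum(w * values[j][d] for j, w in enumerate(weights))
               for d in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Three toy token vectors (hypothetical values). A real model would derive
# queries, keys, and values from embeddings via learned linear projections.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = causal_self_attention(tokens, tokens, tokens)
for i, w in enumerate(weights):
    print(i, [round(x, 3) for x in w])
```

The first token can only attend to itself, so its weight list has a single entry. Each later token spreads its attention over everything before it, which is what lets the model "focus" on relevant earlier words.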

How to Keep It Under 2 Million Parameters

To stay within the 2M parameter limit, everything must be optimized:

- Use a smaller vocabulary size, especially if focusing on a specific domain.

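- Limit the number of layers and attention heads. (In standard multi-head attention the heads split the embedding dimension, so the head count alone does not add parameters; depth and width are what drive the total.)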

- Choose compact embedding dimensions.

- Reduce the size of the internal feedforward layers.

Even with these constraints, your model can still learn to reason, generate text, and perform tasks if trained properly.
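A quick way to check a design against the 2M budget is to count parameters before writing any model code. The sketch below assumes a GPT-style decoder-only layout (learned position embeddings, biases on all linear layers, two layer norms per block). The function name and the example configuration are illustrative assumptions, not a prescribed architecture.

```python
def count_parameters(vocab_size, d_model, n_layers, d_ff, context_len,
                     tie_embeddings=True):
    """Estimate learnable parameters of a GPT-style decoder-only transformer."""
    token_emb = vocab_size * d_model
    position_emb = context_len * d_model            # learned position embeddings
    # Query, key, value, and output projections, each with a bias vector.
    attention = 4 * (d_model * d_model + d_model)
    # Two linear layers: d_model -> d_ff -> d_model, each with a bias.
    feedforward = (d_model * d_ff + d_ff) + (d_ff * d_model + d_model)
    layer_norms = 2 * (2 * d_model)                 # scale and shift, twice per block
    per_layer = attention + feedforward + layer_norms
    final_norm = 2 * d_model
    # With tied embeddings the output head reuses the token embedding matrix.
    head = 0 if tie_embeddings else vocab_size * d_model
    return token_emb + position_emb + n_layers * per_layer + final_norm + head

# Hypothetical configuration chosen to land under 2M parameters.
config = dict(vocab_size=4096, d_model=128, n_layers=6, d_ff=512, context_len=256)

tied = count_parameters(**config)
untied = count_parameters(**config, tie_embeddings=False)
print(f"tied embeddings:   {tied:,}")    # tied embeddings:   1,746,944
print(f"untied embeddings: {untied:,}")  # untied embeddings: 2,271,232
```

In this configuration the token embedding table alone accounts for about 0.5M parameters, so sharing it with the output head is what keeps the total under 2M. It also shows why a smaller vocabulary pays off so quickly at this scale.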

Data: The Most Crucial Ingredient

What your model learns depends heavily on what it’s fed. Here’s what to keep in mind:

- Focus on high-quality, domain-specific data.

- Use clean, well-structured examples for reasoning.

- Chain-of-thought (CoT) style training helps the model learn to “think” step-by-step.

A chain-of-thought training example might look like this:

Q: If you have 3 apples and give away 1, how many are left?

A: You started with 3. You gave away 1. That leaves 2.

Answer: 2.

Even small models can learn this kind of logic — if trained on thousands of similar examples.
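One way to get thousands of similar examples is to generate them from a template. The sketch below produces subtraction problems in the same Q / A / Answer format. The helper names, item list, and number ranges are assumptions for illustration, and templated data like this would normally be mixed with more varied, hand-checked examples.

```python
import random

def make_example(start, given_away, item="apples"):
    """Format one chain-of-thought example in the Q / A / Answer layout."""
    remaining = start - given_away
    question = (f"Q: If you have {start} {item} and give away {given_away}, "
                f"how many are left?")
    reasoning = (f"A: You started with {start}. You gave away {given_away}. "
                 f"That leaves {remaining}.")
    answer = f"Answer: {remaining}."
    return "\n".join([question, reasoning, answer])

def make_dataset(n_examples, seed=0):
    """Generate n_examples templated problems with a reproducible seed."""
    rng = random.Random(seed)
    items = ["apples", "books", "coins", "pencils"]  # hypothetical item list
    examples = []
    for _ in range(n_examples):
        start = rng.randint(2, 20)
        given_away = rng.randint(1, start)  # never give away more than you have
        examples.append(make_example(start, given_away, rng.choice(items)))
    return examples

dataset = make_dataset(1000)
print(make_example(3, 1))
```

Because the reasoning line is produced from the same numbers as the final answer, every generated example is internally consistent. That consistency matters more for a small model than sheer volume.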